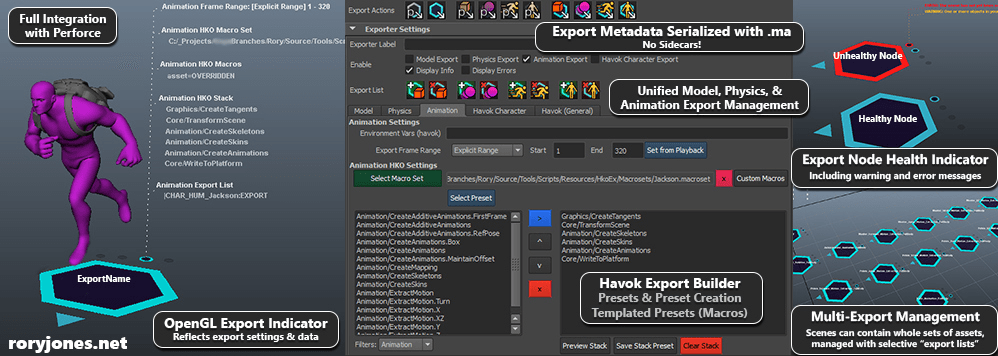

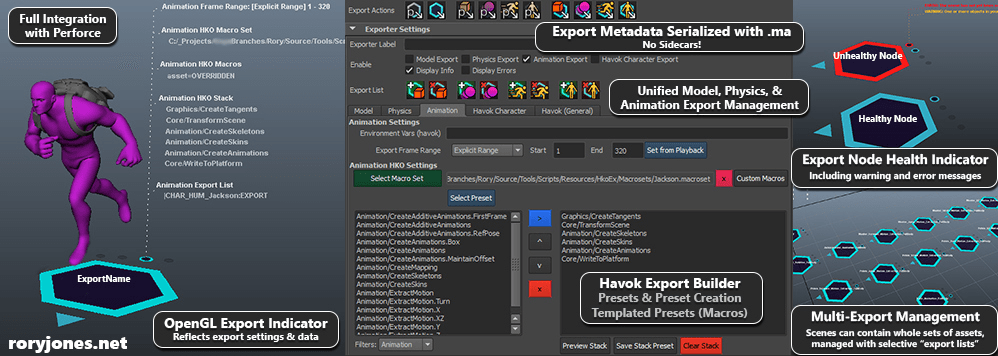

The content export pipeline was one of the longest and most involved tools I built and maintained during my time at 5TH Cell. It was also, without a doubt, the most important to general production and to the art team's ability to do their jobs effectively.

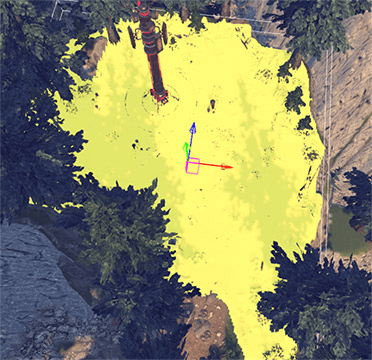

One of the most important pillars of the content pipeline and its management was asset health and export-readiness. Through past experience I've learned that nothing degrades asset source files more than loose export and scene management processes. They're a hotbed for tribal knowledge and data loss. This need for simple, carefree export from any scene file, with no prior knowledge of the scene or its content, led to one of the coolest aspects of the exporter pipeline: the in-scene Export Indicator.

The Export Indicator is the scene node through which you manage all export settings for a given asset. It's rendered via some custom OpenGL code I wrote and reflects the state of the node in a highly visible way. One node per asset and you're ready to export (the model, physics, and animation for a single asset can all stem from one node). Scene-serialized metadata and support for multiple nodes let artists create scenes with multiple assets, such as modular packs ready for export, without any complicated export processes, cryptic naming conventions, or sidecar files. An artist just needed to create another node, add a suffix (used when auto-generating the asset name), then add any desired geometries, bones, etc. to the node's Export List through the node's UI. Once an export was triggered, the export process would automatically iterate over all nodes and selectively export each asset from the scene hierarchy.
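To make the multi-node flow a bit more concrete, here's a minimal Python sketch of the idea. It is not the actual 5TH Cell code: `ExportNode`, `asset_name`, and `collect_exports` are hypothetical names, the scene path is made up, and the naming rule (scene file name plus `_suffix`) is my assumption for illustration only.

```python
from dataclasses import dataclass, field
from pathlib import PurePath
from typing import Dict, List


@dataclass
class ExportNode:
    """Hypothetical stand-in for one in-scene Export Indicator node."""
    suffix: str = ""  # used when auto-generating the asset name
    export_list: List[str] = field(default_factory=list)  # geometries, bones, etc.


def asset_name(scene_path: str, node: ExportNode) -> str:
    """Assumed naming rule: scene file name, plus '_<suffix>' if one is set."""
    base = PurePath(scene_path).stem
    return f"{base}_{node.suffix}" if node.suffix else base


def collect_exports(scene_path: str, nodes: List[ExportNode]) -> Dict[str, List[str]]:
    """Iterate over every export node and map asset name -> objects to export."""
    jobs: Dict[str, List[str]] = {}
    for node in nodes:
        if not node.export_list:
            continue  # nothing assigned to this node, so nothing to export
        name = asset_name(scene_path, node)
        if name in jobs:
            # Two nodes resolving to the same name would overwrite each other.
            raise ValueError(f"duplicate asset name: {name}")
        jobs[name] = list(node.export_list)
    return jobs


# Example: one scene holding a two-asset modular pack.
nodes = [
    ExportNode(export_list=["crate_geo"]),
    ExportNode(suffix="lid", export_list=["lid_geo", "lid_bone"]),
]
jobs = collect_exports("props/crate_pack.scene", nodes)
```

In this sketch each node carries everything needed to export its asset, so the exporter only has to walk the nodes. That's the property that removes the need for naming conventions or sidecar files.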

Our model exporter and format were proprietary (the format was almost like a verbose OBJ), but all other data was managed through Havok. I was able to unify everything into one UI, simplifying a previously disjointed and largely hidden export management workflow. The benefits of providing appropriate feedback to artists cannot be overstated!

In my next pipeline post I'm going to cover the foundation of the entire pipeline at 5TH Cell, a lot of which can be credited with making these other tools so easy to create and roll out. As much work as the export process was, the base pipeline and environment were more than double the size, scope, and level of effort. I'm really excited, but it's taking quite a lot of time to write and design. I might even need to break it into multiple parts! Coming soon!